Since Artificial Intelligence burst onto the social scene, we have talked about how it would change our jobs and our economy. However, its deepest impact could be taking place in the most intimate and delicate area of our lives: our affective relationships. Millions of people have started seeking companionship and affection in AI-powered digital partners.

Platforms like Replika, Character.AI, Nomi, and CrushOn.AI have evolved from simple chatbots into emotional, sexual, and friendship companions capable of simulating deep bonds. This new trend, popularly known as "Digital Partners," has opened a crucial debate about the authenticity, privacy, and true psychological impact of bonding with an algorithm.

The rise of these systems, which have gained a firm foothold since 2023, raises existential questions about the nature of human relationships and the ethical boundaries between simulation and real experience. At Estudio Neobox, we analyze why this trend is so appealing and where its danger lies.

The Appeal of Programmed Perfection

What makes an AI companion more attractive than a real person? The answer lies in absolute personalization and constant attention. Digital partners offer a series of irresistible advantages compared to human interactions:

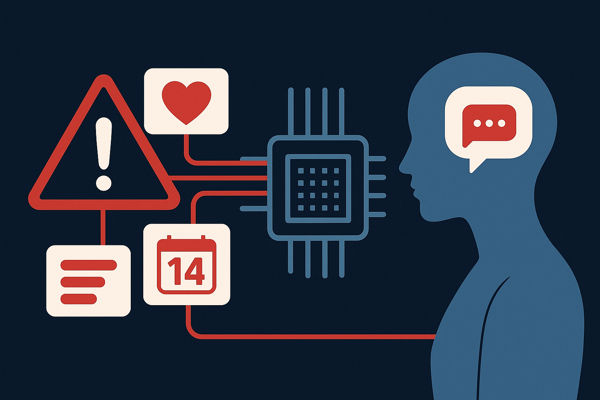

- 24/7 Availability: They respond at any time, eliminating the frustration of waiting or rejection.

- Perfect Memory: They remember every personal detail, important date, and preference you have told them, simulating a deep, unconditional connection.

- Absence of Judgment: They create a safe environment where the user can express fantasies or vulnerabilities without fear of criticism or conflict.

These systems use advanced algorithms that learn from every conversation, memorize the user's language, and adapt to be the "ideal partner" at all times. This immediate response and constant validation create a psychologically safe environment, but, according to experts, also a deeply addictive one.

The Risk of Emotional Dependence and Artificial Grief

Psychologists and developers have observed that the personalization is so intense that users develop genuine emotional bonds with these artificial entities. In some cases, users have reported feeling real grief when a platform update removed features (such as erotic role-play) or changed the programmed personality of their companion.

Robert Sternberg's triangular theory of love includes intimacy, passion, and commitment. Chatbots are experts at simulating all three. They can recall confidences (intimacy), fulfill fantasies (passion), and never miss a date (commitment). However, algorithmic efficiency eliminates two vital components of real love: vulnerability and reciprocity.

Human love is messy, unpredictable, and full of risks. By eliminating the risk, AI makes the bond predictable and effortless. The user can confuse constant validation with a real bond, which, according to experts, can generate dependence and complicate genuine human relationships in the future.

The big question that remains is: Does AI understand love, or does it only simulate it? Philosophy professor Gwen Nally argues that emotions arise only in the user; the chatbot merely simulates affection because it lacks the conscious experience and social context that define human life. While the debate over whether advanced AI could develop its own versions of emotions remains open, for now, the affection is unidirectional.

Intimacy as Data Mining: An Ethical and Privacy Risk

Beyond the psychological implications, the rise of digital partners exposes ethical and digital security risks that we cannot ignore.

1. Exploitation of Vulnerabilities

AI relationship platforms collect incredibly intimate data. Every confession, fantasy, and personal detail you share is being used to "train" the algorithms and, potentially, to create an emotional profile of you. Experts like Jaron Lanier have warned about the danger of companies exploiting these emotional vulnerabilities for economic gain or to manipulate user behavior.

2. Modification Without Warning

The nature of the service means that the platform has total control over your digital partner. It can alter or delete your virtual companion, or change its personality, without prior notice. When this happens, the user loses not only their "partner" but also access to their entire intimate conversation history, leaving them vulnerable and without any control.

The Future of Human Connection: Training Ground or Escape?

The social impact of this technology is complex. Some studies suggest that people who bond with AI tend to idealize the relationship and show less interest in traditional marriage. At the same time, figures like David Eagleman have proposed using AI as a "training ground" to practice empathy, communication, and negotiation, all crucial skills for human relationships.

However, there is a fine line between training and escape. If you get used to a virtual partner who never leaves you, never surprises you, and always agrees with you, your tolerance for imperfection and frustration in genuine human bonds may decrease.

Neuroscience and psychology remind us that personal growth (and neuroplasticity) occurs through effort and difficulty. Overcoming a conflict with a partner, managing sadness after a disagreement, or celebrating a mutual commitment are what strengthen bonds and help us grow.

A perfectly programmed AI offers comfort but lacks the growth engine found only in the imperfect reciprocity of real love. Ultimately, the debate over digital partners forces us to reflect on what kind of companionship we are seeking: a real, challenging bond, or the comfort of a perfectly programmed illusion.

We turn your ideas into reality, creating unique digital experiences. With more than 15 years of knowledge and experience, we design and develop custom websites. We build brands and help them achieve success. We offer a complete service, from design and content creation to social media integration and administration.
